In recent years, generative AI has gained significant attention across industries. It has shown impressive results in applications such as image generation, text synthesis, media generation and converting content from one medium to another, and the cybersecurity field has only recently started warming up to the idea of using it.

The question is: where in cybersecurity does generative AI bring real value?

In this article, we offer a practitioner’s view, drawn from real experience of applying generative AI to cybersecurity problems.

Understanding Generative AI

Before delving into the specifics of generative AI in cybersecurity, it is essential to understand what generative AI is. Generative AI is a subset of artificial intelligence focused on training machines to generate content that appears to have been created by humans. One of the best-known generative architectures is the Generative Adversarial Network (GAN), which consists of two neural networks, a generator and a discriminator, that compete with each other: the generator creates content, and the discriminator tries to distinguish real content from generated content.
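
For readers who want the formal version, the original GAN paper (Goodfellow et al., 2014) frames this competition as a two-player minimax game over a value function V(D, G). Here D(x) is the discriminator’s estimated probability that a sample x is real, and G(z) is the generator’s output for random noise z:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator pushes V up by scoring real samples close to 1 and generated samples close to 0; the generator pushes V down by producing samples that the discriminator scores as real.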

Today, generative AI is closely associated with GPT, so explaining GPT here helps link the concept of generative AI to a widely used technology. GPT stands for Generative Pre-Trained Transformer, which combines three terms: Generative, Pre-Trained and Transformer.

As explained above, the model can generate human-like content, which is why it is called Generative.
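
GPT models generate text autoregressively: they repeatedly predict the next token given everything produced so far. The toy below illustrates only that generation loop. As a loudly stated simplification, it swaps the transformer for plain word-pair (bigram) counts learned from a three-sentence corpus and always picks the most frequent next word. It is not how GPT works internally, just a minimal sketch of "learn patterns from text, then generate from them".

```python
from collections import Counter, defaultdict
from typing import Dict, List


def train_bigram(corpus: List[str]) -> Dict[str, Counter]:
    """Count which word follows which (a toy stand-in for learning text statistics)."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]  # start/end markers
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts: Dict[str, Counter], max_words: int = 10) -> str:
    """Generate one word at a time, always choosing the most frequent next word."""
    word, out = "<s>", []
    for _ in range(max_words):
        if word not in counts:
            break
        # most_common breaks ties by first occurrence, so output is deterministic
        word = counts[word].most_common(1)[0][0]
        if word == "</s>":
            break
        out.append(word)
    return " ".join(out)


if __name__ == "__main__":
    model = train_bigram([
        "the attacker sent a phishing email",
        "the attacker stole a password",
        "the analyst blocked the attacker",
    ])
    print(generate(model))
```

A real GPT predicts a probability distribution over its whole vocabulary using the entire preceding context, not just the previous word, and usually samples from it rather than always taking the top choice.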

The term Pre-Trained comes from a deep learning technique in which a model is first trained on a large amount of data and then fine-tuned for a specific task. In the case of GPT, the model is pre-trained on a massive amount of text, such as books, articles and web pages, to learn the statistical patterns and structures of natural language. This pre-training phase is critical because it gives the model a general understanding of language that can be applied to many different tasks. Because pre-training requires enormous amounts of data and very large, expensive infrastructure, only a few large technology companies and governments build pre-trained models. After pre-training, the model is fine-tuned on specific language tasks such as translation, question answering or summarization. This is done by adding task-specific output layers and adjusting the weights of the pre-trained model on the task’s data. Fine-tuning lets the model adapt to the specifics and requirements of the task while still leveraging the general language knowledge learned during pre-training.
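
The "add a task-specific output layer" step can be sketched in a few lines. Below, a hypothetical frozen "pre-trained" encoder (a made-up table of 2-D word embeddings, `PRETRAINED_EMBEDDINGS`) turns a message into a feature vector, and only a new logistic-regression output layer is trained to flag phishing-style messages. This is a simplification: it keeps the pre-trained weights frozen, whereas full fine-tuning as described above would also update them.

```python
import math
from typing import List, Tuple

# Hypothetical stand-in for a pre-trained model: fixed 2-D embeddings per word.
# In a real model these come from pre-training; here they are invented.
PRETRAINED_EMBEDDINGS = {
    "urgent": [1.0, 0.1],
    "password": [0.9, 0.3],
    "verify": [0.8, 0.2],
    "account": [0.2, 0.9],
    "meeting": [-0.9, 0.2],
    "lunch": [-1.0, 0.1],
}


def encode(text: str) -> List[float]:
    """Average the frozen embeddings of known words (unknown words are ignored)."""
    vecs = [PRETRAINED_EMBEDDINGS[w] for w in text.lower().split() if w in PRETRAINED_EMBEDDINGS]
    if not vecs:
        return [0.0, 0.0]
    return [sum(v[i] for v in vecs) / len(vecs) for i in range(2)]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_head(examples: List[Tuple[str, int]], epochs: int = 200, lr: float = 0.5):
    """Train only the new output layer (weights w, bias b) with gradient descent on log loss."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for text, label in examples:
            x = encode(text)
            p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            err = p - label  # gradient of log loss with respect to the logit
            w = [w[i] - lr * err * x[i] for i in range(2)]
            b -= lr * err
    return w, b


def predict(head, text: str) -> float:
    """Probability that the message is phishing-like, according to the trained head."""
    w, b = head
    x = encode(text)
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)


if __name__ == "__main__":
    head = train_head([
        ("verify your password", 1),
        ("urgent account notice", 1),
        ("lunch meeting", 0),
        ("team meeting notes", 0),
    ])
    print(round(predict(head, "urgent password reset"), 3))
```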

A transformer model is a neural network that learns context, and thereby meaning, by tracking relationships in sequential data such as the words in a sentence or the frames in a video. The key innovation of the transformer architecture is the self-attention mechanism, which lets the model capture long-range dependencies between elements of a sequence, making it highly effective for tasks involving sequential data such as text. The architecture is also known for its scalability, its ability to parallelize computation, and its ability to model complex relationships in sequences. Transformers were introduced in the paper “Attention is All You Need” by Vaswani et al. in 2017 and have since become a foundational architecture for many machine learning tasks. In an August 2021 paper, Stanford researchers coined the term “foundation models” for large models of this kind, arguing that they were driving a paradigm shift in AI.
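
The core of self-attention is the scaled dot-product formula from Vaswani et al., softmax(QKᵀ/√d)V. The sketch below implements it in plain Python for a handful of token vectors. As a simplifying assumption it skips the learned query, key and value projections (it uses Q = K = V = the input), multiple heads and positional encodings. Because every position computes a score against every other position directly, a token can draw on a distant token in a single step, which is what makes long-range dependencies easy to capture.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    """Softmax with max-subtraction for numerical stability."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix) -> Tuple[Matrix, Matrix]:
    """Scaled dot-product self-attention with Q = K = V = x (no learned projections)."""
    d = len(x[0])
    weights = []
    for q in x:
        # score this query against every key in the sequence, near or far
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        weights.append(softmax(scores))
    # each output row is a weighted average of all value vectors
    out = [[sum(w * v[j] for w, v in zip(row, x)) for j in range(d)] for row in weights]
    return out, weights


if __name__ == "__main__":
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    out, w = self_attention(tokens)
    for row in w:
        print([round(p, 3) for p in row])
```

Each row of `w` shows how much one token attends to every token in the sequence; the rows sum to 1.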


Generative AI’s Potential In Cybersecurity

Since the introduction of ChatGPT, Large Language Models (LLMs) have raised the industry’s curiosity about, and expectations of, generative AI’s potential to revolutionize cybersecurity products and operations. However, security data is often locked in silos and bound by privacy constraints, which makes it hard to acquire the high-quality, comprehensive datasets needed to train LLMs. As a result, adoption of these technologies has been somewhat fragmented.

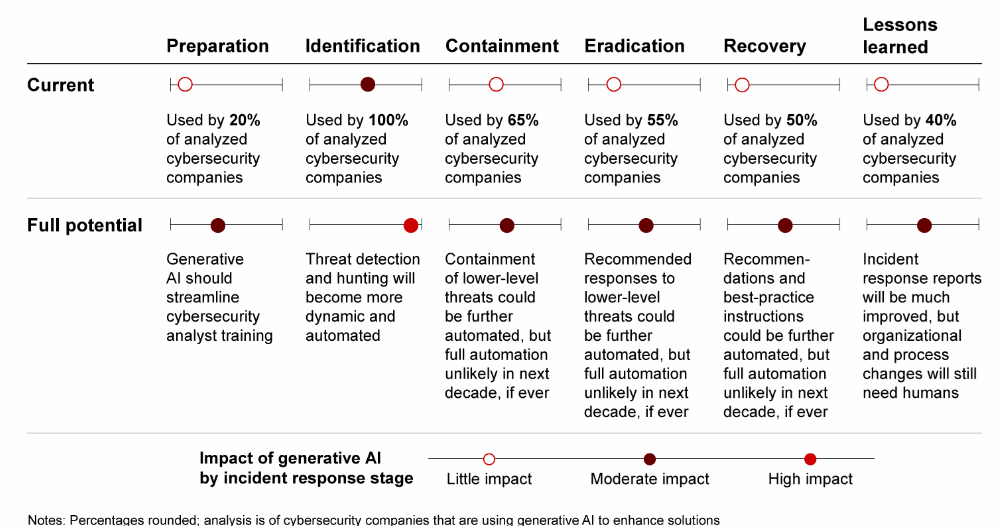

Viewed through the lens of SANS’ six stages of incident response (preparation, identification, containment, eradication, recovery, and lessons learned), generative AI holds the most promise in the identification stage, that is, threat identification. Security analysts have already adopted generative AI as a tool for quickly detecting cyberattacks and assessing their scope and potential impact. For instance, it helps analysts filter incident alerts efficiently, which reduces false positives.
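
To make the alert-filtering idea concrete, here is a minimal sketch of an LLM-assisted triage step. The `ask_model` callable, the prompt wording and the `Alert` fields are all hypothetical; no specific product or model API is implied. The key design choice is fail-safe: only an explicit "benign" verdict suppresses an alert, so a malformed or unexpected answer still reaches a human analyst.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Alert:
    # Hypothetical alert fields, for illustration only.
    alert_id: str
    description: str


def build_prompt(alert: Alert) -> str:
    """Ask the model for a one-word verdict on a single alert."""
    return (
        "You are assisting a SOC analyst. Classify the alert below as "
        "'benign' or 'suspicious'. Answer with one word.\n"
        f"Alert {alert.alert_id}: {alert.description}"
    )


def triage(
    alerts: List[Alert], ask_model: Callable[[str], str]
) -> Tuple[List[Alert], List[Alert]]:
    """Split alerts into an analyst queue and a suppressed (likely false-positive) list."""
    queue: List[Alert] = []
    suppressed: List[Alert] = []
    for alert in alerts:
        verdict = ask_model(build_prompt(alert)).strip().lower()
        # Fail safe: anything other than an explicit "benign" goes to a human.
        if verdict == "benign":
            suppressed.append(alert)
        else:
            queue.append(alert)
    return queue, suppressed


if __name__ == "__main__":
    # Stand-in for a real LLM call (hypothetical keyword rule).
    def fake_model(prompt: str) -> str:
        return "benign" if "scheduled backup" in prompt else "suspicious"

    alerts = [
        Alert("A-1", "Large outbound transfer during scheduled backup window"),
        Alert("A-2", "PowerShell spawned by Word document"),
    ]
    queue, suppressed = triage(alerts, fake_model)
    print([a.alert_id for a in queue], [a.alert_id for a in suppressed])
```

Suppressed alerts would still benefit from periodic human sampling, since a model that wrongly says "benign" is exactly the failure this filter cannot catch on its own.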

Furthermore, as generative AI matures, its ability to detect and proactively hunt for threats is likely to become increasingly dynamic and automated. This promises to improve the efficacy of cybersecurity operations and help organizations stay ahead of an evolving threat landscape.

Recent research on the use of generative AI across the six SANS incident response stages clearly shows that its immediate potential is highest in threat identification.


In the SANS framework’s containment, eradication, and recovery stages, adoption rates among the cybersecurity companies analyzed vary from roughly one-half to two-thirds, with containment showing the most progress. In these stages, generative AI is bridging knowledge gaps by giving analysts guidance and recovery instructions drawn from strategies that proved effective in prior incidents. Greater automation of containment, eradication, and recovery plans promises further gains.
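
One common way to ground such guidance in prior incidents is retrieval: find the most similar past cases and pass their playbooks to the model alongside the new incident. The sketch below uses simple word-overlap (Jaccard) similarity in place of the embedding search a production system would more likely use; the incident records in `PAST_INCIDENTS` and the prompt text are made up for illustration.

```python
import re
from typing import Dict, List, Set

# Hypothetical knowledge base of past incidents and what worked.
PAST_INCIDENTS: List[Dict[str, str]] = [
    {"summary": "ransomware encrypted file server shares",
     "playbook": "Isolate affected hosts, disable SMB shares, restore from offline backups."},
    {"summary": "phishing email harvested user credentials",
     "playbook": "Reset exposed passwords, revoke active sessions, block the sender domain."},
    {"summary": "web shell found on public web server",
     "playbook": "Take the server offline, remove the web shell, patch the exploited plugin."},
]


def tokens(text: str) -> Set[str]:
    """Lowercase word set used for overlap scoring."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Size of the intersection divided by size of the union (0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def retrieve_guidance(new_incident: str, k: int = 1) -> List[Dict[str, str]]:
    """Return the k past incidents whose summaries overlap most with the new one."""
    query = tokens(new_incident)
    ranked = sorted(PAST_INCIDENTS,
                    key=lambda inc: jaccard(query, tokens(inc["summary"])),
                    reverse=True)
    return ranked[:k]


def build_prompt(new_incident: str) -> str:
    """Assemble a prompt that grounds the model in retrieved prior playbooks."""
    context = "\n".join(f"- {inc['summary']}: {inc['playbook']}"
                        for inc in retrieve_guidance(new_incident, k=2))
    return (f"Past incidents and what worked:\n{context}\n\n"
            f"Suggest containment steps for: {new_incident}")


if __name__ == "__main__":
    print(build_prompt("credentials stolen through phishing email"))
```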

The long-term impact of generative AI in these stages is expected to be moderate, with continued human oversight needed to ensure its effectiveness.

Generative AI is also proving useful in the lessons-learned stage, where it streamlines the writing of incident response reports and thereby improves internal communication. These reports can be fed back into the model to strengthen defenses. For example, Google’s Security AI Workbench, powered by the Sec-PaLM 2 LLM, turns raw data from recent attacks into both machine-readable and human-readable threat intelligence, speeding up responses (under human supervision). Although human involvement is expected to decrease, it remains a vital part of the process.
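
The machine-readable versus human-readable split can be illustrated without any model at all: the same structured incident record is serialized once as JSON for tools and once as a short prose summary for people. This sketch does not reflect the Security AI Workbench’s actual output format, and the `IncidentRecord` fields are hypothetical. In an LLM-based workflow, one plausible arrangement is for the model to draft the prose while the structured record remains the source of truth.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class IncidentRecord:
    # Hypothetical fields; real threat-intelligence formats are far richer.
    incident_id: str
    attack_type: str
    indicators: List[str] = field(default_factory=list)
    lessons: List[str] = field(default_factory=list)


def to_machine_readable(rec: IncidentRecord) -> str:
    """Serialize the record as JSON for downstream tools."""
    return json.dumps(asdict(rec), indent=2)


def to_human_readable(rec: IncidentRecord) -> str:
    """Render the same record as a short summary for people."""
    lines = [f"Incident {rec.incident_id}: {rec.attack_type}."]
    if rec.indicators:
        lines.append("Indicators: " + ", ".join(rec.indicators) + ".")
    for lesson in rec.lessons:
        lines.append(f"Lesson learned: {lesson}")
    return "\n".join(lines)


if __name__ == "__main__":
    rec = IncidentRecord("IR-7", "credential phishing", ["203.0.113.5"], ["Enforce MFA for VPN access"])
    print(to_machine_readable(rec))
    print(to_human_readable(rec))
```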


Key Points To Consider Before Adopting Generative AI

For Security Strategy And Governance

- Generative AI won’t eliminate the operational and technical intricacies inherent in cybersecurity.

- Make generative AI adoption in cybersecurity a standing agenda item for board and C-suite meetings.

- Avoid fixating exclusively on integrating generative AI into cybersecurity without considering the organization’s wider cybersecurity context.

For Security Operations

- Engage security operations (SecOps) in the verification of generative AI outputs.

- Train SecOps personnel to detect threats using both generative AI and traditional methods, so that they do not over-rely on the AI and can judge the quality of its results.

- Use a diverse set of generative AI models across the cybersecurity infrastructure rather than depending on a single model.
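
The verification and model-diversity recommendations can work together: ask several independent models for a verdict and send any disagreement to a SecOps analyst. The sketch below uses majority voting with a hypothetical `min_agreement` threshold; the model callables are stand-ins, not real APIs.

```python
from collections import Counter
from typing import Callable, Dict


def ensemble_verdict(
    prompt: str,
    models: Dict[str, Callable[[str], str]],
    min_agreement: float = 1.0,
) -> dict:
    """Collect one verdict per model; flag for human review unless agreement is high enough."""
    if not models:
        raise ValueError("at least one model is required")
    votes = {name: ask(prompt).strip().lower() for name, ask in models.items()}
    top, count = Counter(votes.values()).most_common(1)[0]
    agreement = count / len(votes)
    return {
        "verdict": top,                  # majority answer
        "agreement": agreement,          # fraction of models that gave it
        "needs_human_review": agreement < min_agreement,
        "votes": votes,                  # kept so an analyst can see who disagreed
    }


if __name__ == "__main__":
    # Hypothetical stand-ins for three different models.
    models = {
        "model_a": lambda p: "malicious",
        "model_b": lambda p: "malicious",
        "model_c": lambda p: "benign",
    }
    print(ensemble_verdict("Classify: outbound beacon every 60s to rare domain", models))
```

The default `min_agreement=1.0` (unanimity) is a conservative choice; lowering it reduces analyst workload at the cost of letting more contested verdicts through unchecked.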

For Cybersecurity Companies

- Protect against plausible-sounding but incorrect content produced by generative AI (hallucinations).

- Safeguard against external tampering with generative AI algorithms and models, which could introduce backdoor vulnerabilities.
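
One cheap guard against hallucinated details is to check that every indicator the model mentions actually appears in the evidence it was given. The sketch below does this for IPv4 addresses only, using a simple regular expression plus the standard `ipaddress` module for validation; this narrow scope is an assumption for illustration, since real indicator extraction covers many more types, such as domains and file hashes.

```python
import ipaddress
import re
from typing import List, Set

IPV4_PATTERN = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def extract_ipv4(text: str) -> Set[str]:
    """Return syntactically valid IPv4 addresses found in text."""
    found = set()
    for candidate in IPV4_PATTERN.findall(text):
        try:
            found.add(str(ipaddress.IPv4Address(candidate)))
        except ValueError:
            pass  # e.g. 999.1.1.1 matches the pattern but is not a valid address
    return found


def unsupported_indicators(model_output: str, source_logs: List[str]) -> Set[str]:
    """IPs the model cites that never appear in the logs it was given (possible hallucinations)."""
    evidence: Set[str] = set()
    for line in source_logs:
        evidence |= extract_ipv4(line)
    return extract_ipv4(model_output) - evidence


if __name__ == "__main__":
    logs = ["Failed login from 198.51.100.7", "Outbound connection to 203.0.113.9:443"]
    answer = "The attacker used 198.51.100.7 and 192.0.2.44."
    print(unsupported_indicators(answer, logs))
```

An empty result does not prove the answer is correct, but a non-empty one is a concrete reason to send it back for human review.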


By: Arnab Chattopadhyay, FireCompass

About FireCompass:

FireCompass is a SaaS platform for Continuous Automated Pen Testing, Red Teaming and External Attack Surface Management (EASM). FireCompass continuously indexes and monitors the deep, dark and surface webs using nation-state grade reconnaissance techniques. The platform automatically discovers an organization’s digital attack surface and launches multi-stage safe attacks, mimicking a real attacker, to help identify breach and attack paths that conventional tools would otherwise miss.

Feel free to get in touch with us to get a better view of your attack surface.